A new study by Apple researchers presents a method that lets an AI model learn one aspect of the structure of brain electrical activity without any annotated data. Here’s how.

PAirwise Relative Shift

In a new study called “Learning the relative composition of EEG signals using pairwise relative shift pretraining”, Apple introduces PARS, which is short for PAirwise Relative Shift.

Current models rely heavily on human-annotated brain activity data, in which experts mark which segments correspond to Wake, REM, Non-REM1, Non-REM2, and Non-REM3 sleep stages, where seizure events start and end, and so on.

What Apple did, in a nutshell, was get a model to teach itself to predict how far apart in time different segments of brain activity occur, based on raw, unlabeled data.

From the study:

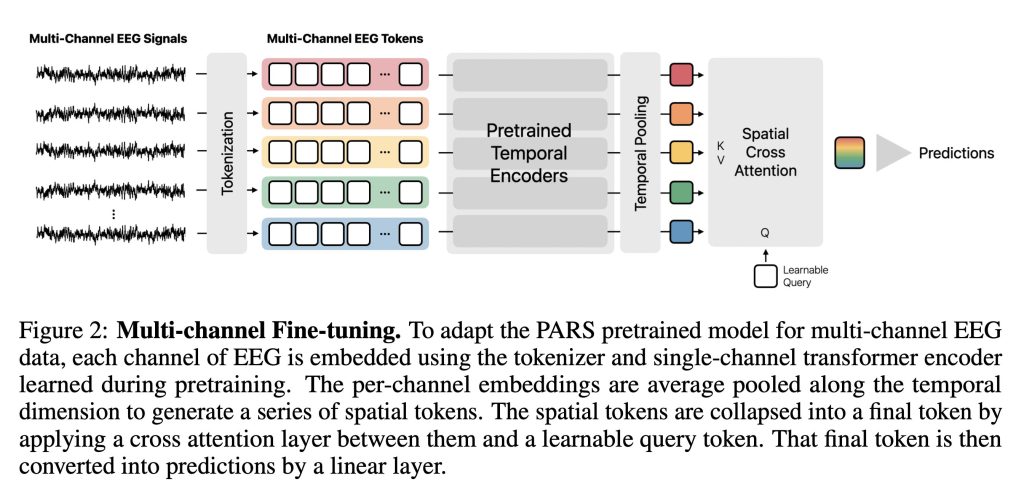

“Self-supervised learning (SSL) offers a promising approach for learning electroencephalography (EEG) representations from unlabeled data, reducing the need for expensive annotations for clinical applications like sleep staging and seizure detection. While current EEG SSL methods predominantly use masked reconstruction strategies like masked autoencoders (MAE) that capture local temporal patterns, position prediction pretraining remains underexplored despite its potential to learn long-range dependencies in neural signals. We introduce PAirwise Relative Shift or PARS pretraining, a novel pretext task that predicts relative temporal shifts between randomly sampled EEG window pairs. Unlike reconstruction-based methods that focus on local pattern recovery, PARS encourages encoders to capture relative temporal composition and long-range dependencies inherent in neural signals. Through comprehensive evaluation on various EEG decoding tasks, we demonstrate that PARS-pretrained transformers consistently outperform existing pretraining strategies in label-efficient and transfer learning settings, establishing a new paradigm for self-supervised EEG representation learning.”

In other words, the researchers saw that existing methods primarily train models to fill in small gaps in the signal. So they explored whether an AI could learn the broader structure of EEG signals directly from raw, unlabeled data.

As it turns out, it can.

In the paper, they describe a self-supervised learning method that predicts how small segments of an EEG signal relate to each other in time, which can enable better performance on multiple EEG analysis tasks, from sleep staging to seizure detection.
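To make the idea concrete, here is a minimal sketch of how training targets for such a pretext task could be built: sample random windows from one unlabeled recording, then record how far apart each pair starts. The function name, the normalization to [-1, 1], and the array layout are assumptions made for illustration, not details taken from Apple's paper.

```python
import numpy as np

def sample_window_pairs(signal, window_len, num_windows, rng):
    """Sample random windows and build pairwise relative-shift targets.

    signal: array of shape (channels, time) from one unlabeled recording.
    Returns (windows, shifts), where shifts[i, j] is the start of window j
    minus the start of window i, scaled to the range [-1, 1].
    Hypothetical helper for illustration only.
    """
    n_samples = signal.shape[1]
    max_start = n_samples - window_len
    if max_start <= 0:
        raise ValueError("recording must be longer than one window")

    # Random start positions; no labels are needed, only the timestamps.
    starts = rng.integers(0, max_start + 1, size=num_windows)
    windows = np.stack([signal[:, s:s + window_len] for s in starts])

    # Pairwise differences of start positions are the regression targets.
    shifts = (starts[None, :] - starts[:, None]) / max_start
    return windows, shifts
```

In a setup like this, an encoder would see only the windows and be trained to regress the `shifts` matrix, so the supervision comes from the recording's own timeline rather than from human annotations.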

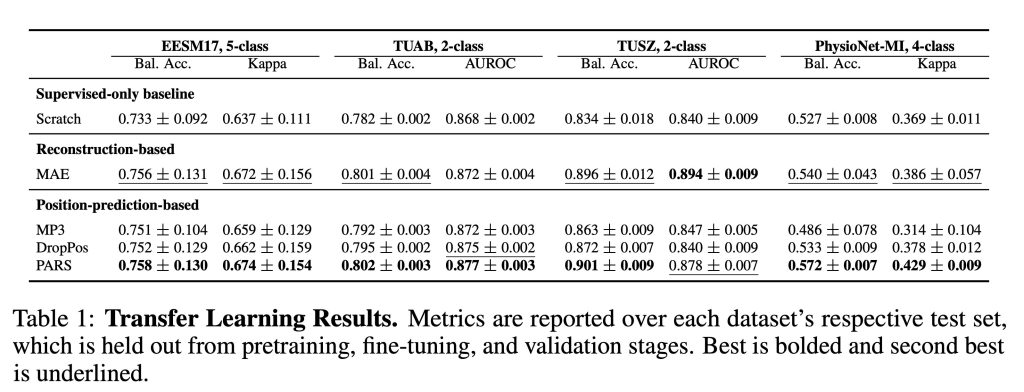

The results were promising, as the PARS-pretrained model outperformed or matched prior methods on three of the four different EEG benchmarks tested.

But what does it have to do with AirPods?

These were the four datasets used to evaluate the PARS-pretrained model:

- Wearable Sleep Staging (EESM17)

- Abnormal EEG Detection (TUAB)

- Seizure Detection (TUSZ)

- Motor Imagery (PhysioNet-MI)

In the first dataset, EESM17 refers to Ear-EEG Sleep Monitoring 2017, which contains “overnight recordings from 9 subjects with a 12-channel wearable ear-EEG system and a 6-channel scalp-EEG system”.

Here’s what an ear-EEG system looks like:

While the ear-EEG uses different electrodes from a standard scalp system, it can still independently pick up many clinically relevant brain signals, such as sleep stages and certain seizure-related patterns.

And because the EESM17 dataset was used in a study conducted by Apple, which has added multiple health sensors to its wearables in recent years, it’s not hard to imagine a world where AirPods get EEG sensors, much like the AirPods Pro 3 recently got a photoplethysmography (PPG) sensor for heart rate sensing.

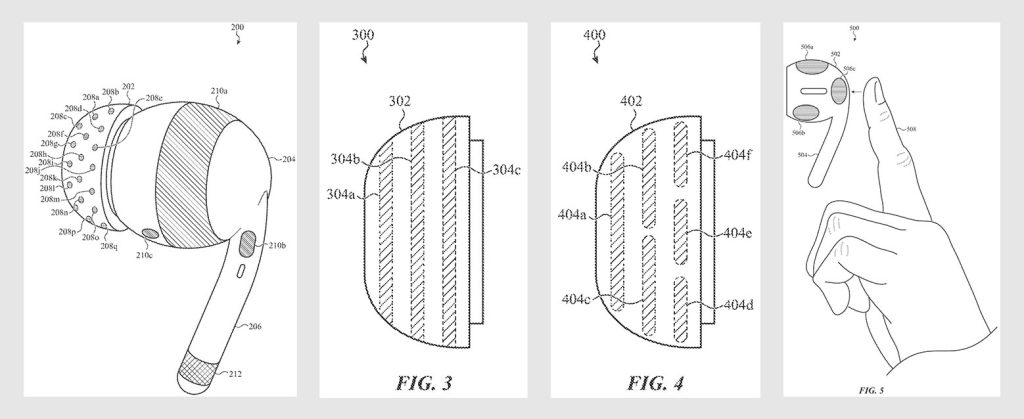

And here is the kicker: in 2023, Apple filed a patent application for “a wearable electronic device for measuring biosignals of a user.”

The patent mentions ear-EEG devices explicitly as an alternative to a scalp system, while also presenting their limitations:

“Brain activity can be monitored using electrodes placed on the scalp of a user. The electrodes may in some cases be placed inside or around the outer ear of the user. Measuring of the brain activity using electrodes placed in or around the outer ear may be preferred due to benefits such as reduced device mobility and decreased visibility of the electrodes when compared to other devices that require electrodes to be placed on visible areas around the scalp of the user. However, for accurate measurements of brain activity using an ear-electroencephalography (EEG) device, the ear-EEG device may need to be customized for a user’s ear (e.g., possibly customized for the user’s concha, ear canal, tragus, etc.), and may need to be customized differently for different users, so that the electrodes placed on the ear-EEG device may remain in continuous contact with a user’s body. Because an ear’s size and shape vary from one user to another, and because a single user’s ear size and shape, and size and shape of structures such as a user’s ear canal, may change over time, even a customized ear-EEG device may fail to generate accurate measurements at times (or over time). In addition, a customized ear-EEG device may be expensive.”

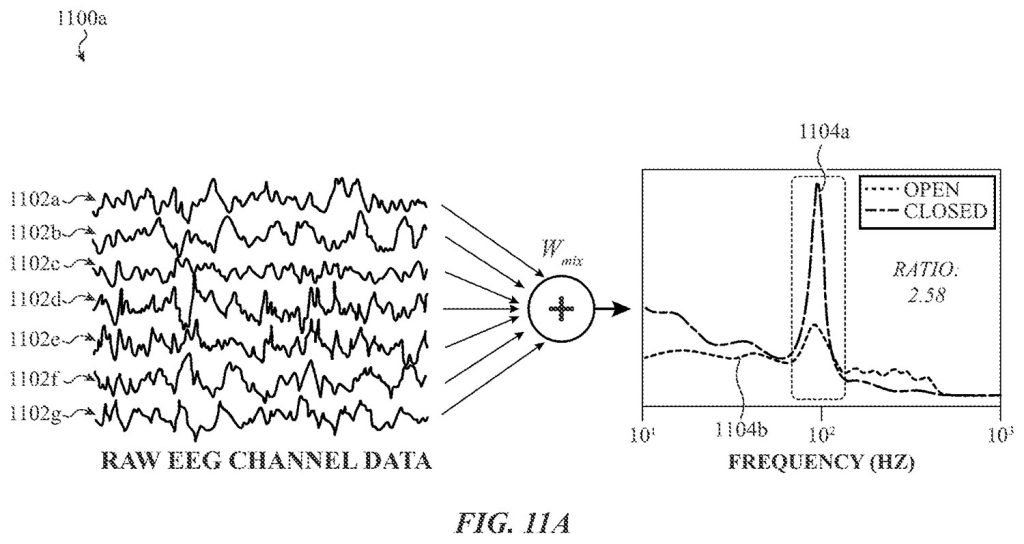

Apple’s patent then addresses these limitations by spreading more electrodes than strictly needed around the AirPods’ ear tips, and having an AI model select the electrodes with the best signal quality using metrics like impedance, noise level, skin contact quality, and the distance between active and reference electrodes.

Next, it assigns different weights to each electrode to combine all signals into a single, optimized waveform. The patent even describes a tapping or squeezing gesture that would start or stop measurements, as well as multiple design and engineering alternatives that would make this all possible.
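As a rough illustration of the select-then-weight idea, the sketch below keeps the electrodes with the lowest noise and averages them with inverse-noise weights. The patent does not spell out a formula in the passages quoted here, so the single noise metric, the inverse-noise weighting, and the `combine_electrodes` name are all assumptions for illustration.

```python
import numpy as np

def combine_electrodes(signals, noise_levels, top_k):
    """Select the cleanest electrodes and merge them into one waveform.

    signals: array of shape (electrodes, time).
    noise_levels: array of shape (electrodes,), lower means cleaner.
    Hypothetical helper; inverse-noise weighting is an assumed choice.
    """
    signals = np.asarray(signals, dtype=float)
    noise_levels = np.asarray(noise_levels, dtype=float)

    # Keep the top_k electrodes with the lowest noise estimate.
    keep = np.argsort(noise_levels)[:top_k]

    # Cleaner electrodes get larger weights; weights sum to 1.
    weights = 1.0 / noise_levels[keep]
    weights /= weights.sum()

    # Weighted sum over the selected electrodes gives a single waveform.
    combined = weights @ signals[keep]
    return combined, keep, weights
```

A real system would presumably fold in the other metrics the patent lists, such as impedance and skin contact quality, instead of relying on a single noise estimate.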

Finally, Apple says that the “measurements of the biosignal, may thus be used to inform the user for various biosignal-driven use cases, such as a sleep monitoring or other anomalies, such as seizures,” which are generally the same examples from the new study.

But to be clear: the new study does not mention AirPods and has no connection to the 2023 patent application. It is an investigation into whether a model can teach itself, from unlabeled data, to predict how far apart in time segments of brain activity occur, with ear-EEG recordings making up one of its datasets.

However, it is interesting to see Apple investigate both the hardware to collect this data and an AI model that could make better use of the data once it is collected. Whether this will actually turn into a product or feature remains to be seen.
